5.3.3 Behaviorism and Functionalism

LEARNING OBJECTIVES

By the end of this section you will discover:

- How behaviorism, in its several forms, can support the idea of physicalism.

- How functionalism avoids some of the problems of behaviorism while still supporting physicalism.

- How thought puzzles such as “philosophical zombies,” “thinking machines,” and the “Chinese Room” challenge behaviorism and functionalism.

There is a very different physicalist tradition of attempting to understand the mind that can help us make progress at this point. But again, let’s start at the beginning.

In the early 20th century, inspired by early developments in scientific psychology, and animated by a desire to speed up the pace of such progress, a number of philosophers (and psychologists) developed an approach to the mind called behaviorism. Instead of looking inside the human body to discover the physical realization of the mind, behaviorists thought that our best hope was to look outside the human body, at our environment and our behavior, at external stimuli and physical responses.

How to Train a Brain: Crash Course Psychology #11

There are three different, though related, ideas that people have meant by ‘behaviorism,’ as nicely summarized by contemporary philosopher George Graham in the Stanford Encyclopedia of Philosophy:

Methodological behaviorism is a normative theory about the scientific conduct of psychology. It claims that psychology should concern itself with the behavior of organisms (human and nonhuman animals). Psychology should not concern itself with mental states or events or with constructing internal information processing accounts of behavior. According to methodological behaviorism, reference to mental states, such as an animal’s beliefs or desires, adds nothing to what psychology can and should understand about the sources of behavior. Mental states are private entities that, given the necessary publicity of science, do not form proper objects of empirical study. Methodological behaviorism is a dominant theme in the writings of John Watson (1878–1958).

Psychological behaviorism is a research program within psychology. It purports to explain human and animal behavior in terms of external physical stimuli, responses, learning histories, and (for certain types of behavior) reinforcements. Psychological behaviorism is present in the work of Ivan Pavlov (1849–1936), Edward Thorndike (1874–1949), as well as Watson. Its fullest and most influential expression is B. F. Skinner’s work on schedules of reinforcement.

To illustrate, consider a hungry rat in an experimental chamber. If a particular movement, such as pressing a lever when a light is on, is followed by the presentation of food, then the likelihood of the rat’s pressing the lever when hungry, again, and the light is on, is increased. Such presentations are reinforcements, such lights are (discriminative) stimuli, such lever pressings are responses, and such trials or associations are learning histories.

Analytical or logical behaviorism is a theory within philosophy about the meaning or semantics of mental terms or concepts. It says that the very idea of a mental state or condition is the idea of a behavioral disposition or family of behavioral tendencies, evident in how a person behaves in one situation rather than another. When we attribute a belief, for example, to someone, we are not saying that he or she is in a particular internal state or condition. Instead, we are characterizing the person in terms of what he or she might do in particular situations or environmental interactions. Analytical behaviorism may be found in the work of Gilbert Ryle (1900–76) (SEP, “Behaviorism,” Section 2).

We can see immediately how behaviorism could support physicalism/materialism. If mental states/events are behaviors/tendencies to behave, and behaviors are understood as empirically observable physical movements, utterances, etc., then behaviorism ends up implying that the mind can be fully explained without appealing to anything non-physical. But again, the claim is not that the mind is the brain or any other internal bit of physiology: mental states/events, instead, are just our behaviors/behavioral tendencies.

What physical/material property do I share with the Martian of Lewis’ example, in virtue of which we both experience pain? Here is something we can now say: pain, just like every mental state/event, is a matter of responding in certain ways to certain stimuli. The Martian and I, despite having different physiologies, are both disposed to respond in similar ways (e.g., we “groan and writhe”) to similar stimuli (e.g., people pinching our skin). That is the physical/material property we share in virtue of which we both experience pain.
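The behaviorist answer can be pictured with a small sketch. It assumes two made-up creature types whose insides differ (the attribute names `neurons_firing` and `cavity_pressure`, and the "tickle" stimulus, are hypothetical stand-ins, not anything from Lewis), and a test that looks only at stimulus and response. It is an illustration of the idea, not a model of pain.

```python
from dataclasses import dataclass

# Hypothetical stimulus and response labels, chosen only for illustration.
PINCH = "pinch"
GROAN_AND_WRITHE = "groan and writhe"
NO_REACTION = "no reaction"


@dataclass
class Human:
    """Internals are neural (an assumed stand-in for real physiology)."""
    neurons_firing: bool = False

    def respond(self, stimulus: str) -> str:
        self.neurons_firing = stimulus == PINCH
        return GROAN_AND_WRITHE if self.neurons_firing else NO_REACTION


@dataclass
class Martian:
    """Internals are hydraulic (an assumed stand-in for a different physiology)."""
    cavity_pressure: float = 0.0

    def respond(self, stimulus: str) -> str:
        self.cavity_pressure = 1.0 if stimulus == PINCH else 0.0
        return GROAN_AND_WRITHE if self.cavity_pressure > 0.5 else NO_REACTION


def has_pain_disposition(creature) -> bool:
    """Behaviorist test: inspect only stimulus and response, never the insides."""
    return (creature.respond(PINCH) == GROAN_AND_WRITHE
            and creature.respond("tickle") == NO_REACTION)
```

Both `Human()` and `Martian()` pass `has_pain_disposition`, even though the function never reads `neurons_firing` or `cavity_pressure`. On the behaviorist picture, that shared disposition is all there is to sharing the mental state.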

Behaviorism has faced a number of objections, of course. Several of those objections are objections to all versions of physicalism/materialism, and we will discuss some of those below. But certain objections are unique to behaviorism. Here is Graham again:

The deepest and most complex reason for behaviorism’s decline in influence is its commitment to the thesis that behavior can be explained without reference to non-behavioral and inner mental (cognitive, representational, or interpretative) activity… For many critics of behaviorism, it seems obvious that, at a minimum, the occurrence and character of behavior (especially human behavior) do not depend primarily upon an individual’s reinforcement history [i.e. how they’ve been conditioned to respond to certain stimuli], although that is a factor, but rely on the fact that the environment or learning history is represented by an individual and how … it is represented. The fact that the environment is represented by me constrains or informs the functional or causal relations that hold between my behavior and the environment and may, from an anti-behaviorist perspective, partially disengage my behavior from its conditioning or reinforcement history. No matter, for example, how tirelessly and repeatedly I have been reinforced for pointing to or eating ice cream, such a history is impotent if I just don’t see a potential stimulus as ice cream or represent it to myself as ice cream or if I desire to hide the fact that something is ice cream from others. My conditioning history, narrowly understood as unrepresented by me, is behaviorally less important than the environment or my learning history as represented or interpreted by me (ibid., SEP, “Behaviorism,” Section 7).

This problem is a big one. The behaviorist idea that we can regard all internal states of a person as a “black box,” and that we can explain the mind without them, seems far too hopeful. The intuitive, commonsense examples just listed are worrisome, but the worries don’t stop there. Some have argued that behaviorist tools (reinforcement, conditioning, etc.) cannot explain a number of children’s linguistic abilities. And the more we learn about the brain (e.g., brain trauma/lesions can impact us mentally in many specific ways that are independent of our “learning history”), the more it seems that the brain really does influence behavior in ways that cannot be explained in purely behavioristic terms.

To avoid these problems while retaining behaviorism’s unique perspective that mental states/events are what they do, a number of philosophers have come to embrace functionalism.

Functionalism agrees with behaviorism that mental states/events are individuated (i.e., distinguished from one another) by their causes and effects. But functionalism disagrees with behaviorism in many other ways. Behaviorism claims that, in our explanations of mental states/events, we can only make reference to publicly observable stimuli and responses (and not, for example, to brain states/events). Functionalism makes no such claim. According to functionalism, the causes and effects which individuate a particular mental state/event can be internal, external, or both. Further, according to functionalism, we can use other mental states/events in describing the essential causal role of the mental state we’re trying to understand.

But what do these distinctions look like in practice? For example, think about the confusion that you’re feeling right now (probably). How could (a) a behaviorist or (b) a functionalist individuate that mental state/event? Both (a) and (b) would likely note that this is a kind of mental state that’s caused by reading about the philosophy of mind (external cause), and both (a) and (b) would likely note that this kind of mental state is likely to make you stroke your chin, raise an eyebrow, go back to re-read the previous page, etc. (external effects). But the functionalist can also mention things like the fact that this confusion will probably cause you to reason about philosophical issues, to remember this feeling when you think about whether to major in philosophy, and to believe that philosophy of mind can get complicated, etc. (internal, mental effects).

Behaviorists can make such claims, too, but eventually they will have to explain any such mentalistic terms (reasoning, remembering, believing) in fully behavioral terms. Functionalism doesn’t make that a requirement. “Functionalism is the doctrine that what makes something a thought, desire, pain (or any other type of mental state) depends not on its internal constitution, but solely on its function, or the role it plays, in the cognitive system of which it is a part. More precisely, functionalist theories take the identity of a mental state to be determined by its causal relations to sensory stimulations, other mental states, and behavior” (SEP, “Functionalism,” Section 1).
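One hedged way to picture the contrast is a toy lookup table in which each state is picked out by what it does: which behavior it produces for a given stimulus, and which state comes next. The state, stimulus, and behavior names below are hypothetical, loosely borrowed from the confusion example, and the table is an illustration rather than a psychological theory.

```python
# (current state, stimulus) -> (behavior, next state)
# All state, stimulus, and behavior names are hypothetical.
MACHINE_TABLE = {
    ("calm", "read philosophy of mind"): ("raise an eyebrow", "confused"),
    ("confused", "read philosophy of mind"): ("stroke chin", "confused"),
    ("confused", "re-read the previous page"): ("nod", "calm"),
    ("calm", "re-read the previous page"): ("nod", "calm"),
}


def run(state, stimuli):
    """Feed stimuli to the table one at a time; return the behaviors and final state."""
    behaviors = []
    for stimulus in stimuli:
        behavior, state = MACHINE_TABLE[(state, stimulus)]
        behaviors.append(behavior)
    return behaviors, state
```

Calling `run("calm", ["read philosophy of mind", "read philosophy of mind"])` gives two different behaviors for the same stimulus, because the first reading changes the internal state. A table keyed on stimuli alone could not express that, which is roughly why the functionalist allows relations to other mental states into the description of a state's causal role.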

Like behaviorism, functionalism can escape multiple realizability worries. But functionalism is also less clearly materialistic/physicalist than behaviorism. Here is how contemporary philosopher Janet Levin summarizes the complications here:

Though functionalism is officially neutral between materialism and dualism, it has been particularly attractive to materialists, since many materialists believe…that it is overwhelmingly likely that any states capable of playing the roles in question will be physical states. If so, then functionalism can stand as a materialistic alternative to the [type-type identity theory] …which holds that each type of mental state is identical to a particular type of neural state (ibid.).

In this way, even functionalism can provide us with a material/physical picture of the mind. Like behaviorism, it would be a very different species of materialism/physicalism from that provided by the most straightforward version of materialism/physicalism, i.e., type-type identity theory. And it will be very difficult in practice to specify the complete causal role of each kind of mental state (exercise: try to specify the complete causal role of just one mental state!), but – the functionalist might respond – that’s a philosophical-cum-psychological project that we should all want to help complete.

There are two major objections to functionalism, and both target the same aspect of these views. Functionalism and behaviorism are alike in thinking that a mental state/event is what it does, i.e. they both claim that the entire nature of a mental state is determined by its (necessary or typical) causes and effects. That is to say, both approaches treat the mental state in question as a “black box”: what’s in the box doesn’t matter; all that matters is which outputs the box produces given the inputs it receives.

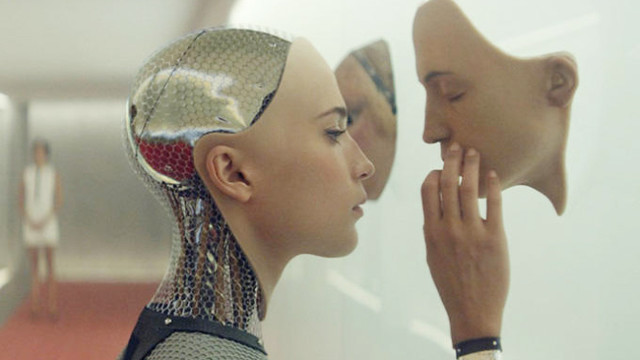

One of the implications of this approach, then, is that if two states/events have exactly the same inputs and outputs, then they are identical in their mental properties. But, for reasons we will discuss in more detail below, that seems wrong. For example, it seems like “philosophical zombies” are possible. In philosophical discussion, philosophical zombies are imaginary creatures that behave precisely as we do, but who – by hypothesis – don’t have certain mental states/events/properties, e.g., consciousness. (See Ned Block, 1981, “Psychologism and Behaviorism,” Philosophical Review, 90: 5–43, and David Chalmers, 1997, The Conscious Mind (OUP), for seminal early discussions of philosophical zombies.) That is to say, a state’s playing a certain causal role doesn’t seem like all there is to at least some mental states. Even if “chatbots” can now mimic a genuine conversation partner, nobody thinks that today’s chatbots literally have minds…right?

In 1950, the mathematician, codebreaker, and philosopher Alan Turing proposed a much-discussed test, a way for us to tell whether machines can think (and so whether they could be said to have minds).

Alan Turing: Crash Course Computer Science #15

Contemporary philosopher Larry Hauser summarizes Turing’s proposal here:

“While we don’t know what thought or intelligence is, essentially, and while we’re very far from agreed on what things do and don’t have it, almost everyone agrees that humans think, and agrees with Descartes that our intelligence is amply manifest in our speech. Along these lines, Alan Turing suggested that if computers showed human-level conversational abilities we should, by that, be amply assured of their intelligence. Turing proposed a specific conversational test for human-level intelligence, the “Turing test” as it has come to be called. Turing himself characterizes this test in terms of an “imitation game” …whose original version “is played by three people, a man (A), a woman (B), and an interrogator (C) who may be of either sex. The interrogator stays in a room apart from the other two. … The object of the game for the interrogator is to determine which of the other two is the man and which is the woman. The interrogator is allowed to put questions to A and B [by teletype to avoid visual and auditory clues]. … . It is A’s object in the game to try and cause C to make the wrong identification. … The object of the game for the third player (B) is to help the interrogator.” Turing continues, “We may now ask the question, ‘What will happen when a machine takes the part of A in this game?’ Will the interrogator decide wrongly as often when the game is being played like this as he does when the game is played between a man and a woman? These questions replace our original, ‘Can machines think?’” (Hauser, IEP, “Artificial Intelligence,” Section 2).

The idea, roughly, is that if people cannot reliably tell whether they’re conversing with another person or with a machine, then we should conclude that that machine is intelligent, at least to some degree.

This behavioristic proposal has come in for some serious objections – some of which are discussed on this very page – but it (along with Turing’s other groundbreaking work in computing) has also spurred some very productive and exciting work, not only in philosophy but in computer science as well. There are long-standing competitions (see, e.g., the Loebner Prize) in which people try to design software that can actually pass the Turing Test. You might wish to do some web searching for more information about this topic – what you’ll find is pretty amazing!

There are two kinds of mental properties in particular that have given rise to these kinds of worries: consciousness and intentionality. We will address consciousness at great length below. Intentionality is “aboutness.” For example, when I believe that the sky is blue, my belief is about the sky. When I fear the reaper, my fear is directed upon, or about, the reaper. And so on. Intentionality is a feature of many – maybe all, as philosopher Franz Brentano and others have argued (Brentano, Franz, 2002, “The Distinction Between Mental and Physical Phenomena”, Philosophy of Mind: Classical and Contemporary Readings (Chalmers ed.), Oxford University Press: 479-484) – mental states. So, any satisfactory materialist/physicalist philosophy of mind will have to say what this property is and how it can be explained in material/physical terms.

Functionalism and behaviorism, as noted, seem to imply that anything that plays the same causal role as a mental state will be like that mental state in all relevant mental respects. But contemporary philosopher John Searle has presented an especially influential objection to that claim, an objection usually called the Chinese Room thought experiment:

One way to test any theory of the mind is to ask oneself what it would be like if my mind actually worked on the principles that the theory says all minds work on. … Suppose that I’m locked in a room and given a large batch of Chinese writing. Suppose furthermore (as is indeed the case) that I know no Chinese, either written or spoken, and that I’m not even confident that I could recognize Chinese writing as Chinese writing distinct from, say, Japanese writing or meaningless squiggles. To me, Chinese writing is just so many meaningless squiggles. Now suppose further that after this first batch of Chinese writing, I am given a second batch of Chinese script together with a set of rules for correlating the second batch with the first batch. The rules are in English, and I understand these rules as well as any other native speaker of English. They enable me to correlate one set of formal symbols with another set of formal symbols, and all that “formal” means here is that I can identify the symbols entirely by their shapes. Now suppose also that I am given a third batch of Chinese symbols together with some instructions, again in English, that enable me to correlate elements of this third batch with the first two batches, and these rules instruct me how to give back certain Chinese symbols with certain sorts of shapes in response to certain sorts of shapes given me in the third batch. Unknown to me, the people who are giving me all of these symbols call the first batch “a script,” they call the second batch a “story,” and they call the third batch “questions.” Furthermore, they call the symbols I give them back in response to the third batch “answers to the questions,” and the set of rules in English that they gave me, they call “the program.” Now just to complicate the story a little, imagine that these people also give me stories in English, which I understand, and they then ask me questions in English about these stories, and I give them back answers in English.
Suppose also that after a while I get so good at following the instructions for manipulating the Chinese symbols and the programmers get so good at writing the programs that from the external point of view – that is, from the point of view of somebody outside the room in which I am locked – my answers to the questions are absolutely indistinguishable from those of native Chinese speakers. Nobody just looking at my answers can tell that I don’t speak a word of Chinese. Let us also suppose that my answers to the English questions are, as they no doubt would be, indistinguishable from those of other native English speakers, for the simple reason that I am a native English speaker. From the external point of view – from the point of view of someone reading my “answers” – the answers to the Chinese questions and the English questions are equally good. But in the Chinese case, unlike the English case, I produce the answers by manipulating uninterpreted formal symbols. As far as the Chinese is concerned, I simply behave like a computer; I perform computational operations on formally specified elements. For the purposes of the Chinese, I am simply an instantiation of the computer program (Searle, “Minds, Brains, and Programs,” 1980: pp. 417-418).

According to Searle, in this case, he does not actually understand Chinese, even though he can now, in effect, take Chinese questions as inputs and quickly produce sensible Chinese answers as outputs. The implication is that even if this room/person/machine takes the same inputs and produces the same outputs as someone who actually has certain mental states (in this case, the mental states involved in understanding Chinese), that doesn’t show that the room/person/machine actually has those mental states.
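A very rough sketch of the room's procedure, under heavy simplifying assumptions: the “program” is reduced to a hypothetical two-entry rule book that pairs input shapes with output shapes, whereas Searle imagines rules rich enough to pass for a native speaker. The English glosses in the comments are for the reader only; the code never uses them.

```python
# Hypothetical rule book: each rule pairs one string of shapes with another.
RULE_BOOK = {
    "你好吗？": "我很好。",                  # gloss: "How are you?" -> "I am fine."
    "天空是什么颜色？": "天空是蓝色的。",    # gloss: "What color is the sky?" -> "The sky is blue."
}


def room(question: str) -> str:
    """Hand back whatever shapes the rule book pairs with the incoming shapes."""
    # Matching is by exact shape; nothing here represents what the symbols mean.
    return RULE_BOOK.get(question, "？")
```

From outside, `room("你好吗？")` returns a sensible reply. Inside, there is only string matching. Whether a vastly larger rule book would change the verdict is exactly what the thought experiment asks us to consider.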

As Searle puts it in the passage above, he’s just “manipulating uninterpreted formal symbols.” He has no idea what those symbols mean. And so, in that sense, the room/person/machine lacks intentionality. So, functionalism and behaviorism seem to miss the mark. Their accounts of what makes a mental state what it is cannot account for what Brentano, for example, has called “the mark of the mental,” i.e., the aboutness, or intentionality, of mental states (see also Tim Crane, “Intentionality as the Mark of the Mental,” Royal Institute of Philosophy Supplement, 1998).

So, does Searle’s argument imply that machines cannot think? If so, isn’t his view bound to fall afoul of multiple realizability considerations? Here’s how Searle responds to this general kind of concern:

“‘Could a machine think?’ My own view is that only a machine could think, and indeed only very special kinds of machines, namely brains and machines that had the same causal powers as brains. And that is the main reason strong AI [“according to strong AI, the computer is not merely a tool in the study of the mind; rather, the appropriately programmed computer really is a mind, in the sense that computers given the right programs can be literally said to understand and have other cognitive states” ibid, p. 417] has had little to tell us about thinking since it has nothing to tell us about machines. By its own definition, it is about programs, and programs are not machines. Whatever else intentionality is, it is a biological phenomenon, and it is as likely to be as causally dependent on the specific biochemistry of its origins as lactation, photosynthesis, or any other biological phenomena. No one would suppose that we could produce milk and sugar by running a computer simulation of the formal sequences in lactation and photosynthesis, but where the mind is concerned many people are willing to believe in such a miracle because of a deep and abiding dualism: the mind they suppose is a matter of formal processes and is independent of quite specific material causes in the way that milk and sugar are not” (ibid, p. 424).

Searle, then, is objecting to a certain kind of computer-enthusiastic functionalism/behaviorism on materialist/physicalist grounds. However it is that our mental states have or acquire intentionality, it looks like it cannot just be the fact that our brain states have the kinds of inputs and outputs that computer programs (currently) do. We’ll consider materialist/physicalist theories of intentionality and mental content in more detail later.

Works Cited

CrashCourse. Alan Turing: Crash Course Computer Science #15. YouTube, YouTube, 17 June 2017, https://www.youtube.com/watch?v=7TycxwFmdB0. Accessed 12 Apr. 2022.

CrashCourse. How to Train a Brain: Crash Course Psychology #11. YouTube, YouTube, 21 Apr. 2014, https://www.youtube.com/watch?v=qG2SwE_6uVM&t=11s. Accessed 12 Apr. 2022.

Graham, George. “Behaviorism.” Stanford Encyclopedia of Philosophy, Stanford University, 19 Mar. 2019, https://plato.stanford.edu/entries/behaviorism/#ThreTypeBeha.

Hauser, Larry. “Artificial Intelligence.” Internet Encyclopedia of Philosophy, https://iep.utm.edu/art-inte/#H2.

Kanijoman. “Ex-Machina-Movie.” Flickr, Flickr, 22 Aug. 2015, https://www.flickr.com/photos/23925401@N06/20167701293. Accessed 12 Apr. 2022.

Levin, Janet. “Functionalism.” Stanford Encyclopedia of Philosophy, Stanford University, 20 July 2018, https://plato.stanford.edu/entries/functionalism/#WhaFun.