4.1: PREAGRICULTURAL HUMANS

Diet

Higher primates, including humans, may be the species with the longest list of nutritional requirements (Bogin 1991). This is likely because we evolved in environments where there was a high diversity of species but low densities of any given species. Humans require 45–50 essential nutrients for growth, maintenance, and repair of cells and tissues. These include protein, carbohydrates, fats, vitamins, minerals, and water. As a species, we are (or were) highly active with high metabolic demands. Humans are not autotrophic: we cannot manufacture our own nutrients. Manufacturing nutrients is metabolically expensive, meaning it takes a lot of energy. If the surrounding environment can provide those nutrients, it makes evolutionary sense to obtain them from outside the body rather than spending energy producing them, as green plant species do (National Geographic Society n.d.). Given our nutritional requirements and caloric needs, it is not surprising that humans are omnivorous and evolved to choose foods that are dense in essential nutrients. One of the ways we identified high-calorie resources in our evolutionary past was through taste, and it is no accident that humans find sweet, salty, and fatty foods appealing.

The human predisposition toward sugar, salt, and fat is innate (Farb and Armelagos 1980). This is reflected in receptors for sweetness found in every one of the mouth’s ten thousand taste buds (Moss 2013). This tuning toward sweet makes sense in an ancestral environment where sweetness signaled high-value resources like ripe fruits. Likewise, “the long evolutionary path from sea-dwelling creatures to modern humans has given us salty body fluids, the exact salinity of which must be maintained” (Farb and Armelagos 1980), drawing us to salty-tasting things. Cravings for fat, another high-calorie resource, are also inborn, with some archaeological evidence suggesting that hominins may have been collecting bones for their fatty marrow, which contains two essential fatty acids necessary for brain development (Richards 2002), rather than for any meat remaining on the surface (Bogin 1991).

Archaeological and bone chemistry studies of preagricultural populations indicate that Paleolithic peoples ate a wider variety of foods than many people eat today (Armelagos et al. 2005; Bogin 1991; Larsen 2014; Marciniak and Perry 2017). Foragers took in more protein, less fat, much more fiber, and far less sodium than modern humans typically do (Eaton et al. 1988). Changes in tooth and intestinal morphology illustrate that animal products were an important part of human diets from the time of Homo erectus onward (Baltic and Boskovic 2015; Richards 2002; Wrangham 2009). These animal products consisted of raw meat scavenged from carnivore kills and marrow from the leftover bones. It is not possible to discern from current archaeological evidence when cooking began. The “cooking hypothesis” proposed by Richard Wrangham (2009) argues that H. erectus was adapted to a diet of cooked food, and phylogenetic studies comparing body mass, molar size, and other characteristics among nonhuman and human primates support this conclusion (Organ et al. 2011). However, the first documented archaeological evidence of human-controlled use of fire is dated to one million years ago, roughly a million years after the first appearance of H. erectus (Berna et al. 2012). Whenever cooking became established, it opened up a wider variety of both plant and animal resources to humans and led to selection for gene variants linked to reductions in the musculature of the jaw and thickness of tooth enamel (Lucock et al. 2014). However, the protein, carbohydrates, and fats preagricultural peoples ate were much different from those we eat today. Wild game, for example, lacked the antibiotics, growth hormones, and high levels of cholesterol and saturated fat associated with industrialized meat production today (Walker et al. 2005). It was also protein dense, providing only 50% of energy as fat (Lucock et al. 2014), and not prepared in ways that increase cancer risk, as modern meats often are (Baltic and Boskovic 2015).

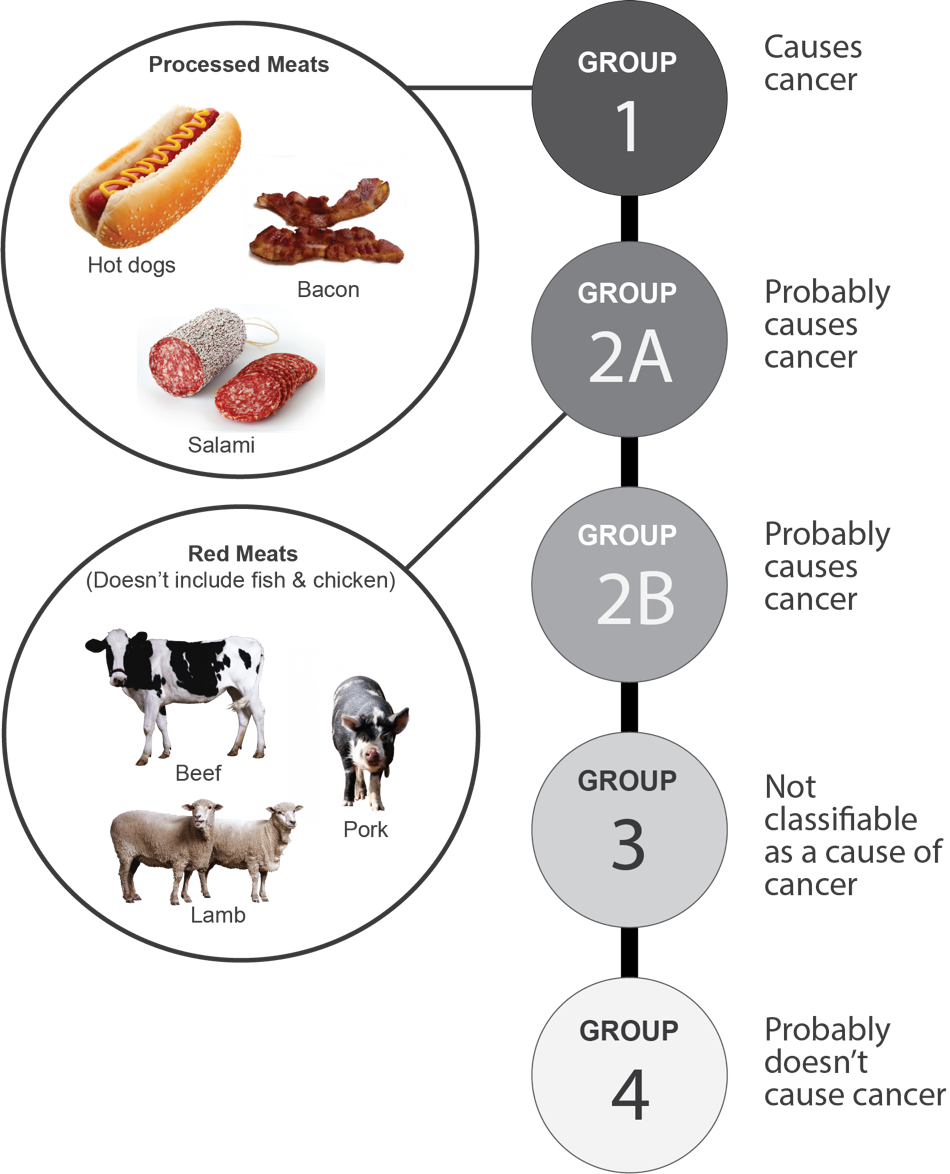

Meats cooked well done over high heat and/or over an open flame, including hamburgers and barbecued meats, are highly carcinogenic due to compounds formed during the cooking process (Trafialek and Kolanowski 2014). Processed meats that have been preserved by smoking, curing, salting, or by adding chemical preservatives such as sodium nitrite (e.g., ham, bacon, pastrami, salami) have been linked to cancers of the colon, lung, and prostate (Abid et al. 2014) (see Figure 16.1). Nitrites/nitrates have additionally been linked to cancers of the ovaries, stomach, esophagus, bladder, pancreas, and thyroid (Abid et al. 2014). In addition, studies analyzing the diets of 103,000 Americans for up to 16 years indicate that those who ate grilled, broiled, or roasted meats more than 15 times per month were 17% more likely to develop high blood pressure than those who ate meat fewer than four times per month, and participants who preferred their meats well done were 15% more likely to suffer from hypertension than those who preferred their meats rare (Liu 2018). A previous study of the same cohort indicated that, “independent of consumption amount, open-flame and/or high-temperature cooking for both red meat and chicken is associated with an increased risk of Type 2 diabetes among adults who consume animal flesh regularly” (Liu et al. 2018). Although cooking, especially of meat (Wrangham 2009), has been argued to be crucial to cognitive and physical development among hominins, there has clearly been an evolutionary trade-off between the ability to preserve protein and the health risks humans experience due to consumption of cooked meat and exposure to chemical preservatives.

Although carbohydrates represent half of the diet on average for both ancient foragers and modern humans, the types of carbohydrates are very different. Ancient foragers ate fresh fruits, vegetables, grasses, legumes, and tubers, rather than the processed carbohydrates common in industrialized economies (Moss 2013). Their diets also lacked the refined white sugar and corn syrup found in many modern foods that, in themselves, contribute to the development of Metabolic Syndrome and diabetes (Pontzer et al. 2012).

Physical Activity Patterns

How do we know how active our ancestors really were? Hominin morphology and physiology provide us with clues. Consider our ancestral environment of sub-Saharan Africa. It was hot and dry, and close to the equator, meaning the sun was brighter and at a more direct angle than it is for many human populations today. This made the transition to bipedalism very important to survival. When the sun is at its highest in the sky, a bipedal human exposes only 7% of its surface area to maximal radiation, approximately one-third of the maximally exposed area of a similarly sized quadruped (Lieberman 2015). In a savannah environment where predators roamed in the cool of the night, it would have been evolutionarily advantageous for small, bipedal hominins to forage in the heat of the day, especially in an open habitat without the safety of trees. As early hominins were primarily scavengers who likely needed to travel to find food, heat-dissipating mechanisms would have been strongly favored.

Humans have four derived sets of adaptations for preventing hyperthermia (overheating): (1) fur loss and an increased ability to sweat (versus panting); (2) an external nose, allowing for nasal regulation of the temperature and humidity of air entering the lungs; (3) enhanced ability to cool the brain; and (4) an elongated, upright body. These adaptations suggest an evolutionary history of regular, strenuous physical activity. Some scholars have gone so far as to argue that, beginning with H. erectus, there are skeletal markers of adaptations for endurance running (Lieberman 2015; Richards 2002). The relevant morphological changes include modifications in the arches of the feet, a longer Achilles tendon, a nuchal ligament and ear canals that help maintain balance while running, shoulders decoupled from the head allowing rotation of the torso independently from the pelvis and head, and changes to the gluteus maximus. It is argued these modifications would have provided benefits for running, but not walking, and that H. erectus may have been running prey to the point of exhaustion before closing in for the kill (Lieberman 2015). This conclusion is controversial, with other scholars pointing to a lack of evidence for the necessary cognitive and projectile-making abilities among the genus Homo that far back in time (Pickering and Bunn 2007). Whether our ancestors were walking or running, they were definitely engaged in significant amounts of physical activity on a daily basis. They had to be or they would not have survived. As Robert Malina and Bertis Little (2008) point out, prolonged exertion and motor skills (e.g., muscular strength, tool making, and, eventually, accuracy with projectiles) are important determinants of success and survival in preindustrial societies.

Research with modern foraging populations, although controversial, can also offer clues to ancient activity patterns. Criticisms of such research include sampling bias, because modern foragers occupy marginal habitats, and the fact that such societies have been greatly influenced by their association with more powerful agricultural societies. Modern foragers may also represent an entirely new human niche that appeared only with climatic changes and faunal depletion at the end of the last major glaciation (Marlowe 2005). Despite these issues, the ethnographic record of foragers provides the only direct observations of human behavior in the absence of agriculture (Lee 2013). From such studies, we know hunter-gatherers cover greater distances in single-day foraging bouts than other living primates, and these treks require high levels of cardiovascular endurance (Raichlen and Alexander 2014). Recent research with the Hadza in Tanzania, one of the last remaining foraging populations, indicates that they walk up to 11 kilometers (6.8 miles) daily while hunting or in search of gathered foods (Pontzer et al. 2012), engaging in moderate-to-vigorous physical activity for over two hours each day and thereby meeting the U.S. government’s weekly requirements for physical activity in just two days (Raichlen et al. 2016) (see Figure 16.2). That humans were physically active in our evolutionary past is also supported by evidence that regular physical exercise protects against a variety of health conditions found in modern humans, including cardiovascular disease (Raichlen and Alexander 2014) and Alzheimer’s dementia (Mandsager et al. 2018), even in the presence of brain pathologies indicative of cognitive decline (Buchman et al. 2019).
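The arithmetic behind the Hadza comparison can be checked with a short sketch. It assumes a weekly guideline of 150 minutes of moderate-intensity activity, a figure that is not given in the text above, and it treats "over two hours each day" as a lower bound of 120 minutes. The function and constant names are illustrative only.

```python
import math

# ASSUMPTION (not stated in the text): the weekly guideline is taken to be
# 150 minutes of moderate-intensity activity.
WEEKLY_GUIDELINE_MIN = 150

# From the text: "over two hours each day"; 120 minutes is used as a lower bound.
HADZA_DAILY_MIN = 120

KM_PER_MILE = 1.609344  # exact definition of the international mile


def days_to_meet_guideline(daily_min, weekly_target=WEEKLY_GUIDELINE_MIN):
    """Return the number of whole days needed to reach the weekly target."""
    return math.ceil(weekly_target / daily_min)


def km_to_miles(km):
    """Convert kilometers to miles."""
    return km / KM_PER_MILE


days = days_to_meet_guideline(HADZA_DAILY_MIN)
miles = round(km_to_miles(11), 1)
print(f"Days needed at Hadza activity levels: {days}")
print(f"11 km is about {miles} miles")
```

Under these assumptions, two days at Hadza activity levels exceed the weekly target, whereas someone active for 30 minutes a day would need five days to reach it.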

Infectious Disease

Population size and density remained low throughout the Paleolithic, limiting morbidity and mortality from infectious diseases, which sometimes require large populations to sustain epidemics. Our earliest ancestors had primarily two types of infections to contend with (Armelagos 1990). The first were organisms that adapted to our prehominin ancestors and have been problems ever since. Examples include head lice, pinworms, and yaws. A second set of diseases were zoonoses, diseases that originate in animals and mutate into a form infectious to humans. A contemporary example is the Human Immunodeficiency Virus (HIV), which originated in non-human primates and was likely passed to humans through the butchering of hunted primates for food (Sharp and Hahn 2011). Zoonoses that could have infected ancient hunter-gatherers include tetanus and vector-borne diseases transmitted by flies, mosquitoes, fleas, midges, and ticks. Many of these diseases are slow acting, chronic, or latent, meaning they can last for weeks, months, or even decades, causing low levels of sickness and allowing victims to infect others over long periods of time. Survival or cure does not result in lasting immunity, so survivors return to the pool of potential victims. Such diseases often survive in animal reservoirs, reinfecting humans again and again (Wolfe et al. 2012). A recent study of bloodsucking insects preserved in samples of amber dating from 15 to 100 million years ago indicates that they carried microorganisms that today cause diseases such as filariasis, sleeping sickness, river blindness, typhus, Lyme disease, and malaria (Poinar 2018). Such diseases may have been infecting humans throughout our evolutionary history, and they may have had significant social and economic impacts on small foraging communities because they more often infected adults, who provided the food supply (Armelagos et al. 2005).

Health Profiles

Given their diets, levels of physical activity, and low population densities, the health profiles of preagricultural humans were likely better than those of many modern populations. This assertion is supported by comparative research conducted with modern foraging and industrialized populations. Measures of health taken from 20th-century foraging populations demonstrate excellent aerobic capacity, as measured by oxygen uptake during exertion, and low body-fat percentages, with triceps skinfold measurements half those of white Canadians and Americans. Serum cholesterol levels were also low, and markers for diabetes, hypertension, and cardiovascular disease were missing among them (Eaton et al. 1988; Raichlen et al. 2016). Life expectancies among our ancient ancestors are difficult to determine, but an analysis of living foragers by Michael Gurven and Hillard Kaplan (2007:331) proposed that, “for groups living without access to modern healthcare, public sanitation, immunizations, or an adequate or predictable food supply, at least one-fourth of the population is likely to live as grandparents for 15–20 years.” Based on their analysis, the maximum lifespan among our ancestors was likely seventy years of age, just two years less than average global life expectancy in 2016 (WHO 2018a).